Abstract

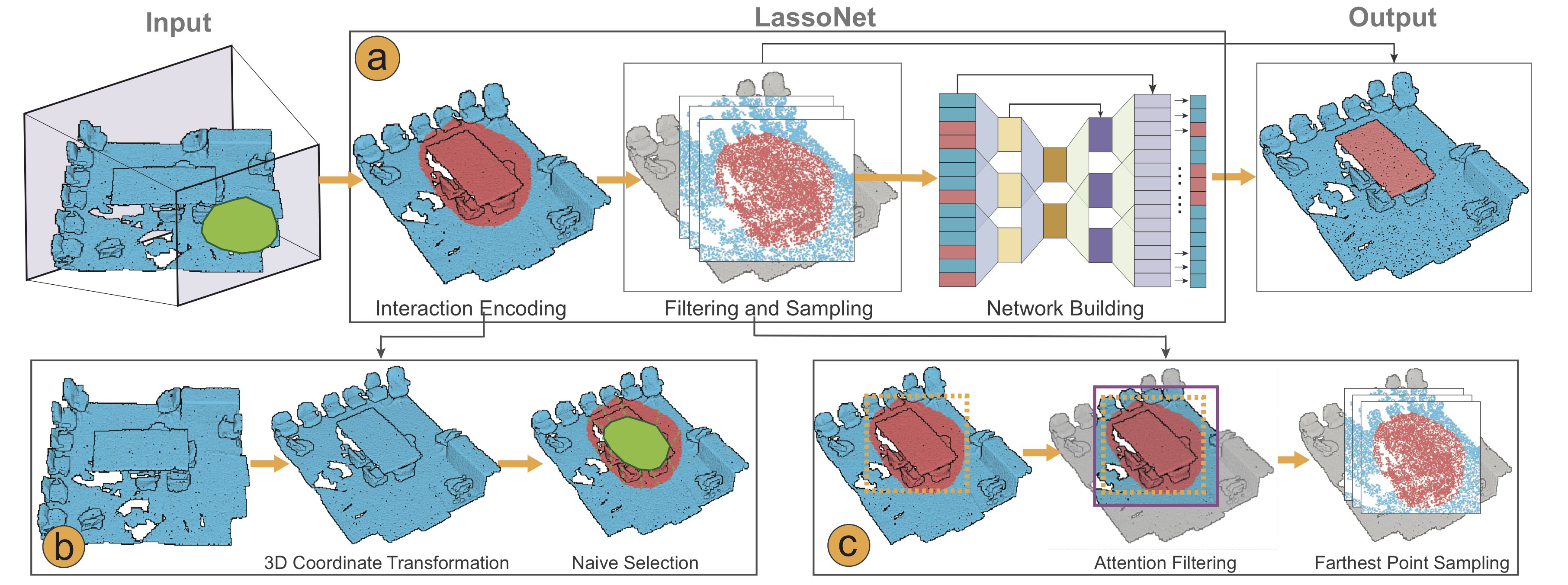

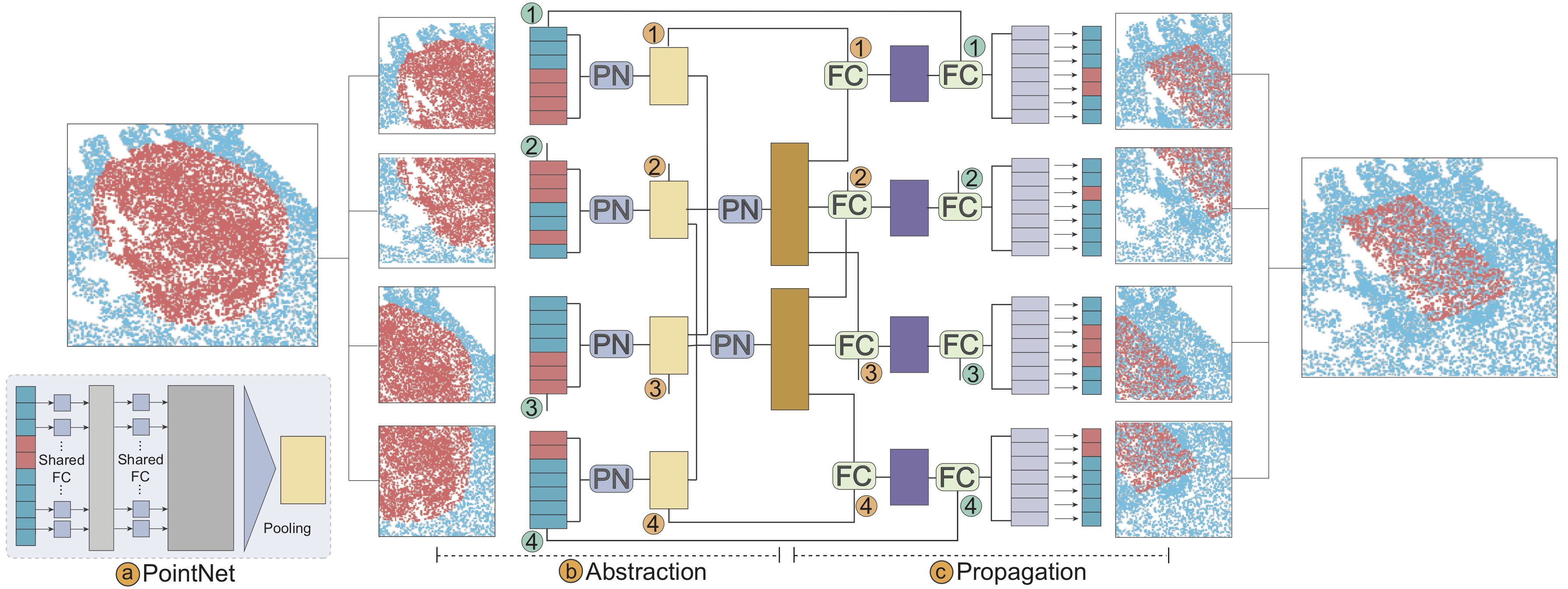

Selection is a fundamental task in exploratory analysis and visualization of 3D point clouds. Prior research on selection methods has relied mainly on heuristics such as local point density, which limits their applicability to general data. Specific challenges stem from the great variability in point clouds (e.g., dense vs. sparse), viewpoints (e.g., occluded vs. non-occluded), and lassos (e.g., small vs. large). In this work, we introduce LassoNet, a new deep neural network for lasso selection of 3D point clouds, which aims to learn a latent mapping from viewpoint and lasso to point cloud regions. To achieve this, we couple user-target points with viewpoint and lasso information through 3D coordinate transformation and naive selection, and improve the scalability of the method via intention filtering and farthest point sampling. A hierarchical network is trained on a dataset of over 30K lasso-selection records collected on two different point cloud datasets. We conduct a formal user study to compare LassoNet with two state-of-the-art lasso-selection methods. The evaluations confirm that our approach improves selection effectiveness and efficiency across different combinations of 3D point clouds, viewpoints, and lasso selections.

Pipeline and Network Architecture
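The abstract names two preprocessing steps, naive selection and farthest point sampling. The following is a minimal NumPy sketch of both under stated assumptions, not the LassoNet implementation. It assumes a 4x4 world-to-camera view matrix, a pinhole camera looking down -z with a hypothetical `focal` length, and a lasso given as a 2D polygon on the image plane. All function names are hypothetical. Intention filtering is not sketched, because its details are not described here.

```python
import numpy as np


def to_camera_coords(points, view):
    """Map world-space points (N, 3) into camera space with a 4x4 view matrix."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ view.T)[:, :3]


def points_in_polygon(xy, polygon):
    """Even-odd ray-casting test: True for each 2D point inside the polygon."""
    x, y = xy[:, 0], xy[:, 1]
    inside = np.zeros(len(xy), dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through each point?
        crosses = (y1 > y) != (y2 > y)
        # x where the edge meets that line; horizontal edges never "cross",
        # so their divide-by-zero results are masked out by `crosses`.
        with np.errstate(divide="ignore", invalid="ignore"):
            x_hit = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (x < x_hit)
    return inside


def naive_selection(points, view, lasso, focal=1.0):
    """Select points in front of the camera whose projection lies inside the lasso."""
    cam = to_camera_coords(points, view)
    depth = -cam[:, 2]  # assumed convention: camera looks down -z
    in_front = depth > 0
    # Perspective projection of the visible points onto the image plane.
    xy = focal * cam[in_front, :2] / depth[in_front][:, None]
    mask = np.zeros(len(points), dtype=bool)
    mask[in_front] = points_in_polygon(xy, np.asarray(lasso, dtype=float))
    return mask


def farthest_point_sampling(points, k, start=0):
    """Greedily pick k indices, each maximizing distance to those already picked."""
    k = min(k, len(points))
    if k <= 0:
        return np.empty(0, dtype=int)
    chosen = np.empty(k, dtype=int)
    chosen[0] = start
    # dist[j] = distance from point j to its nearest already-chosen point.
    dist = np.linalg.norm(points - points[start], axis=1)
    for i in range(1, k):
        chosen[i] = int(np.argmax(dist))
        dist = np.minimum(dist, np.linalg.norm(points - points[chosen[i]], axis=1))
    return chosen
```

With an identity view matrix, a point at (0, 0, -1) projects to the origin and is selected by a square lasso around it. A point at (0, 0, 1) lies behind the camera and is rejected by the depth test before projection. The sampler keeps a running nearest-distance array, so it costs O(Nk) distance evaluations rather than recomputing all pairwise distances.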

Dataset

| Dataset | #Point Clouds | #Targets | #Records |
|---|---|---|---|
| ShapeNet | 2,332 | 6,297 | 19,432 |
| S3DIS | 2,72 | 4,018 | 12,944 |

The table presents statistics of the lasso-selection records. In total, we collected 19,432 lasso-selection records for 6,297 distinct target parts in ShapeNet point clouds, and 12,944 records for 4,018 distinct target parts in S3DIS point clouds.
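As a quick arithmetic check of the "over 30K" figure in the abstract, the totals can be computed from the target and record counts in the table. The point-cloud column is left out here. The snippet only restates the published counts and computes nothing new about the data.

```python
# (target parts, records) per dataset, copied from the statistics table.
stats = {"ShapeNet": (6297, 19432), "S3DIS": (4018, 12944)}

total_targets = sum(t for t, _ in stats.values())
total_records = sum(r for _, r in stats.values())
print(total_targets, total_records)  # 10315 32376

# Average number of lasso-selection records collected per target part.
for name, (targets, records) in stats.items():
    print(f"{name}: {records / targets:.2f} records per target")
```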

The dataset can be downloaded from our GitHub repository.

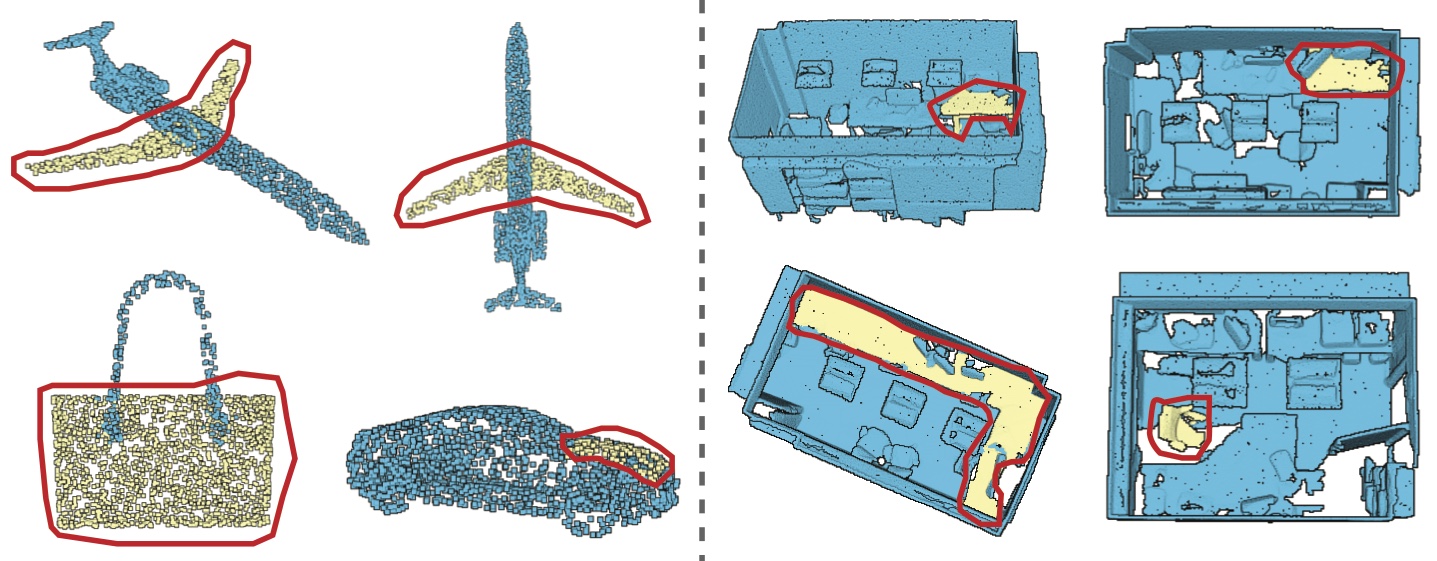

Examples
